Meta’s new moderation policy has been making waves, and not all of the effects are good. According to recent internal reports and third-party analysis, the policy may be leading to more violent and bullying posts on Facebook. As the tech giant shifts its focus toward promoting “free expression,” questions are mounting about the real-world consequences of these changes.

While many support greater freedom of speech, experts warn that these relaxed rules could encourage harmful behavior, leaving users more exposed to hate speech, graphic violence, and online bullying. This article explores the policy changes, the data behind them, their impacts, and what professionals and everyday users can do to stay informed and protected.

Meta’s New Moderation Policy May Be Leading to More Violent and Bullying Posts on Facebook

| Topic | Details |
|---|---|
| Policy Change | Meta relaxed definitions of hate speech and violence in Jan 2025 |
| Violent Content Increase | Up from 0.06-0.07% in 2024 to 0.09% in Q1 2025 |
| Bullying Content | Increased to 0.07-0.08%, with March seeing a peak |
| Content Removals | Hate speech takedowns dropped to 3.4M (lowest since 2018) |
| Spam Removals | Fell by 50%, from 730M to 366M |
| Fake Account Removals | Dropped from 1.4B to 1B |
| New Moderation Tools | Shift from third-party fact-checking to crowd-sourced Community Notes |
| Oversight Criticism | Meta’s Oversight Board says changes were rushed |
| Official Report | Meta Transparency Center |

Meta’s intention to create a platform that embraces free speech is understandable. However, its new moderation policy appears to be leading to more violent and bullying posts on Facebook, raising alarms across sectors.

Meta’s own figures point in one direction: content that would previously have been removed is now more likely to stay up. Whether you’re a casual user or a digital professional, it’s crucial to stay informed, vigilant, and proactive as these rules evolve.

Visit Meta’s Transparency Center to review the full report and stay updated on future changes.

Understanding Meta’s Policy Shift

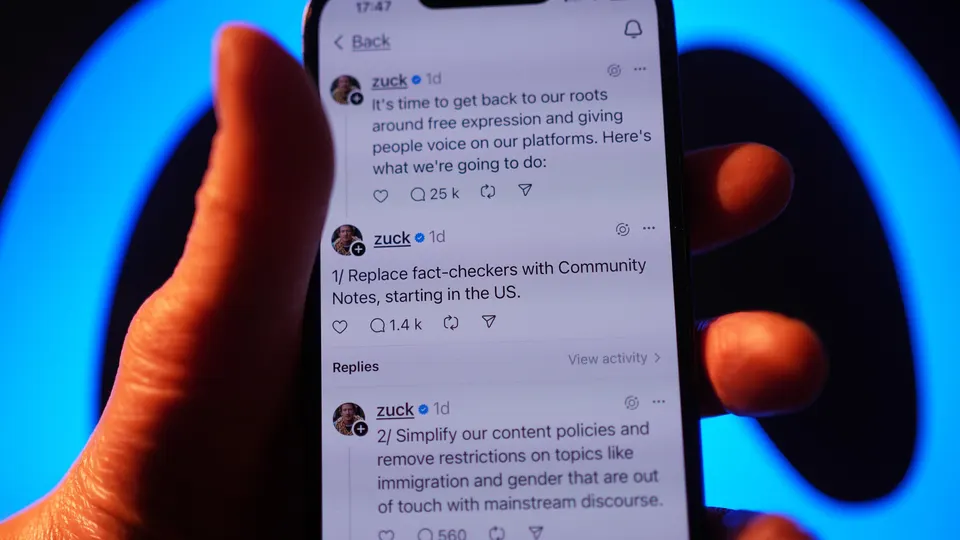

In January 2025, Meta announced a significant change to how it moderates content on Facebook. The goal? Promote “free expression” and reduce what the company described as “moderation errors” that previously led to the over-removal of content.

However, this policy shift also redefined hate speech and graphic content, narrowing the scope of enforcement. Now, only direct attacks or explicitly dehumanizing language fall under removal policies. Other offensive content, such as expressions of exclusion, is no longer automatically flagged or removed.

This has sparked concern among researchers, civil rights groups, and even Meta’s own Oversight Board.

What the Data Shows

Let’s break down the actual figures to understand what’s happening:

Violent and Bullying Content on the Rise

According to Meta’s report:

- Violent and graphic content rose from 0.06-0.07% of viewed content in 2024 to 0.09% in Q1 2025.

- Bullying and harassment content rose to 0.07-0.08% of viewed content, peaking in March 2025.

Major Decline in Content Removals

- Hate speech removals dropped to 3.4 million, the lowest since 2018.

- Spam removals fell from 730 million to 366 million.

- Fake account removals dropped by 400 million, from 1.4 billion to 1 billion.

The sharp reduction in enforcement actions suggests that less content is being flagged or taken down. While Meta claims this means fewer moderation errors, critics argue it signals a dangerous leniency.
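To put these figures on a common scale, the short sketch below computes the relative change for each one and converts a prevalence percentage into views per 10,000. It is an illustration only: the function names are hypothetical, the numbers are the ones quoted in this article, and the violent-content baseline assumes the lower end (0.06%) of the reported 2024 range.

```python
def relative_change(before: float, after: float) -> float:
    """Return the percentage change from `before` to `after`."""
    return (after - before) / before * 100.0


def views_per_10k(prevalence_percent: float) -> float:
    """Convert a prevalence percentage into views per 10,000 content views."""
    return prevalence_percent / 100.0 * 10_000


# Figures quoted in this article (removals in millions, prevalence in percent).
# Assumption: 0.06% is used as the 2024 baseline for violent content.
figures = {
    "spam removals (M)": (730.0, 366.0),
    "fake account removals (M)": (1400.0, 1000.0),
    "violent content prevalence (%)": (0.06, 0.09),
}

changes = {name: relative_change(b, a) for name, (b, a) in figures.items()}

for name, pct in changes.items():
    print(f"{name}: {pct:+.1f}%")

print(f"0.09% prevalence = about {views_per_10k(0.09):.0f} in every 10,000 views")
```

Run as written, this reports a drop of about 49.9% in spam removals, a drop of about 28.6% in fake account removals, and a 50% relative rise in violent content prevalence, which works out to roughly 9 in every 10,000 content views. If the 2024 baseline were 0.07% instead, the relative rise would be closer to 29%, so the size of the increase depends on which end of the reported range is used.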

How Community-Based Moderation Works

Instead of relying on professional moderators and AI alone, Meta introduced “Community Notes,” a user-driven system modeled on X’s feature of the same name.

But this approach comes with risks:

- Lack of expertise: Everyday users might not recognize subtle forms of misinformation or hate speech.

- Bias potential: Votes can be manipulated by bad actors or organized groups.

- Delayed response: Harmful content can stay online longer before being addressed.

This change effectively places more responsibility on users to monitor and report harmful behavior.

Additional Concerns and Industry Reactions

Several digital rights organizations and tech ethics experts have raised red flags about Meta’s decision:

- The Electronic Frontier Foundation (EFF) noted that such shifts could set a precedent that other platforms may follow, potentially leading to a broader deterioration in content quality.

- UNESCO warned that social media platforms play a role in protecting digital civic space and must act responsibly.

- Former Meta employees voiced concerns anonymously to Wired, claiming internal teams were unprepared for the volume of harmful content left unchecked.

Additionally, advertisers are becoming more cautious, with some pulling back budgets due to brand safety concerns.

Case Study: The Impact on a School Community

At a high school in Illinois, a surge in Facebook bullying incidents following the policy changes prompted school administrators to hold emergency digital literacy workshops. Teachers reported students encountering harmful memes and aggressive comment threads, which escalated into real-life conflicts.

This example underscores how online moderation policies can directly influence real-world behavior, especially among impressionable users.

Comparative Perspective: How Other Platforms Handle It

To add more context, it’s useful to compare Meta’s approach to other major platforms:

- TikTok: Uses AI and human moderators with a focus on immediate takedowns and mental health flags.

- YouTube: Implements stricter community guidelines and educational prompts before viewing sensitive content.

- Reddit: Employs community moderation with strong subreddit-specific rules and volunteer moderators.

Meta’s growing reliance on user-led systems is controversial, especially given its scale.

Real-Life Stories: Voices from the Community

Several users have started sharing their experiences with the platform since the policy change. One small business owner in Texas reported a spike in hateful comments on Facebook ads targeting minority communities, despite using existing brand safety filters. A parent in the UK said her teenager had to delete their account after being targeted by repeated harassment without receiving adequate platform support.

These testimonials highlight that while the policy aims to protect freedom of speech, it may be doing so at the expense of individual safety and well-being.

Why This Matters to Everyone

Whether you’re a parent, student, business owner, or content creator, these changes affect you.

For Parents:

Children using Facebook or Messenger Kids may now be more exposed to harmful content, including cyberbullying and hate speech.

For Businesses:

Brand reputation is at stake if your ads or posts appear next to offensive content.

For Professionals:

HR managers, educators, and therapists may see increased social media-related issues among employees, students, or clients.

Steps You Can Take

Here are some practical actions to protect yourself and others:

1. Adjust Your Privacy Settings

- Limit who can comment or send messages.

- Review and manage your activity log regularly.

2. Use Reporting Tools

- Facebook still allows users to report content.

- Reports are reviewed, though now with a potentially slower or lighter-touch response.

3. Educate Your Community

- Talk to kids, employees, or friends about identifying and avoiding toxic content.

- Encourage positive engagement and call out harmful behavior.

4. Monitor Brand Safety

- Use tools like Meta Business Suite to control where your ads appear.

- Regularly audit user-generated content on your brand’s pages.

5. Leverage External Tools

- Consider third-party software for content filtering or parental control.

- Nonprofits like Common Sense Media offer advice on managing digital exposure.

6. Engage with Advocacy Groups

- Follow organizations like the Center for Humane Technology, or use StopBullying.gov, a U.S. government resource, for ongoing guidance and campaigns.

FAQs

Q1: Why did Meta change its moderation policy?

Meta says the goal was to promote free expression and reduce the number of false-positive content removals.

Q2: Are users more at risk now?

Meta’s own figures suggest so. The prevalence of violent and bullying content has risen, and with fewer takedowns, users may be more exposed to both.

Q3: Can I still report harmful posts?

Absolutely. Use Facebook’s “Report Post” feature, although response times and outcomes may vary.

Q4: What does Meta’s Oversight Board say?

They criticized the policy changes as rushed and lacking human rights impact assessments.

Q5: What are “Community Notes”?

They’re crowdsourced notes attached to posts that users find misleading or harmful, similar to fact-checking but done by peers.

Q6: Can businesses opt out of ad placements next to sensitive content?

Yes. Meta allows advertisers to manage placements via brand safety settings in Meta Ads Manager.

Q7: Are there tools for tracking moderation trends?

Yes, though the options have narrowed. Meta shut down CrowdTangle in August 2024 and replaced it with the Meta Content Library, while firms like Graphika offer insights on how content spreads and is moderated.

Q8: What does academic research say about moderation policies?

Studies from institutions like Stanford and MIT suggest that relaxed content moderation often leads to increased polarization, misinformation, and online harassment, particularly in political or cultural debate zones.